Multimodal Datasets with Controllable Mutual Information

Generating highly multimodal datasets with explicitly calculable mutual information between modalities.

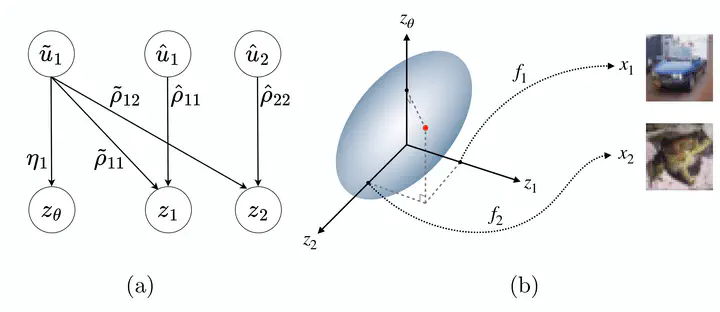

We introduce a framework for generating highly multimodal datasets with explicitly calculable mutual information between modalities. The resulting benchmark datasets provide a novel testbed for systematic studies of mutual information estimators and multimodal self-supervised learning techniques. To construct realistic datasets with known mutual information, the framework combines a flow-based generative model with a structured causal framework for generating correlated latent variables.
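The sketch below shows why mutual information can stay explicitly calculable in a setup like this. It is a minimal illustration under stated assumptions, not the paper's implementation. It assumes the latent variables are jointly Gaussian and linked by a linear structural causal model, so that the mutual information between latent blocks has a closed form. The helpers `linear_sem_cov` and `gaussian_mi`, the three-node graph (a shared cause `c` driving one latent per modality, `x` and `y`), and all weights are hypothetical. The final step applies a separate invertible linear map to each modality latent as a stand-in for a flow. Mutual information is invariant under invertible maps applied separately to each variable, so the value computed on the latents is unchanged.

```python
import numpy as np


def linear_sem_cov(weights: np.ndarray, noise_var: np.ndarray) -> np.ndarray:
    """Covariance of a linear-Gaussian structural causal model (hypothetical helper).

    weights[i, j] is the coefficient of z_i in the equation for z_j (edge i -> j),
    so z = weights.T @ z + eps with eps ~ N(0, diag(noise_var)).
    Solving for z gives z = A @ eps with A = (I - weights.T)^{-1}.
    """
    n = weights.shape[0]
    a = np.linalg.inv(np.eye(n) - weights.T)
    return a @ np.diag(noise_var) @ a.T


def gaussian_mi(cov: np.ndarray, idx_x: list[int], idx_y: list[int]) -> float:
    """Mutual information (nats) between two blocks of a jointly Gaussian vector:
    I(X; Y) = 0.5 * [log det S_xx + log det S_yy - log det S_(x,y)].
    """
    idx = idx_x + idx_y
    _, logdet_x = np.linalg.slogdet(cov[np.ix_(idx_x, idx_x)])
    _, logdet_y = np.linalg.slogdet(cov[np.ix_(idx_y, idx_y)])
    _, logdet_xy = np.linalg.slogdet(cov[np.ix_(idx, idx)])
    return 0.5 * (logdet_x + logdet_y - logdet_xy)


# Hypothetical toy graph: shared cause c (index 0) drives one latent per
# modality, x (index 1) and y (index 2). Weights and noise variances are arbitrary.
w = np.zeros((3, 3))
w[0, 1] = 1.0  # c -> x
w[0, 2] = 1.0  # c -> y
cov = linear_sem_cov(w, noise_var=np.array([1.0, 1.0, 1.0]))
mi_xy = gaussian_mi(cov, [1], [2])

# Stand-in for per-modality invertible generators: rescale x and y separately.
# A real flow is nonlinear but still bijective, so it also leaves MI unchanged.
t = np.diag([1.0, 3.0, -0.5])
cov_t = t @ cov @ t.T
mi_xy_t = gaussian_mi(cov_t, [1], [2])

print(round(mi_xy, 4), round(mi_xy_t, 4))  # 0.1438 0.1438
```

Here `x` and `y` have correlation 0.5, so the result matches the bivariate formula $-\tfrac{1}{2}\log(1-\rho^2) = \tfrac{1}{2}\log\tfrac{4}{3}$. The linear map is used only because its effect on a Gaussian covariance is exact. A nonlinear flow cannot be checked this way, but the invariance argument is the same.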

For more information, please see our paper.